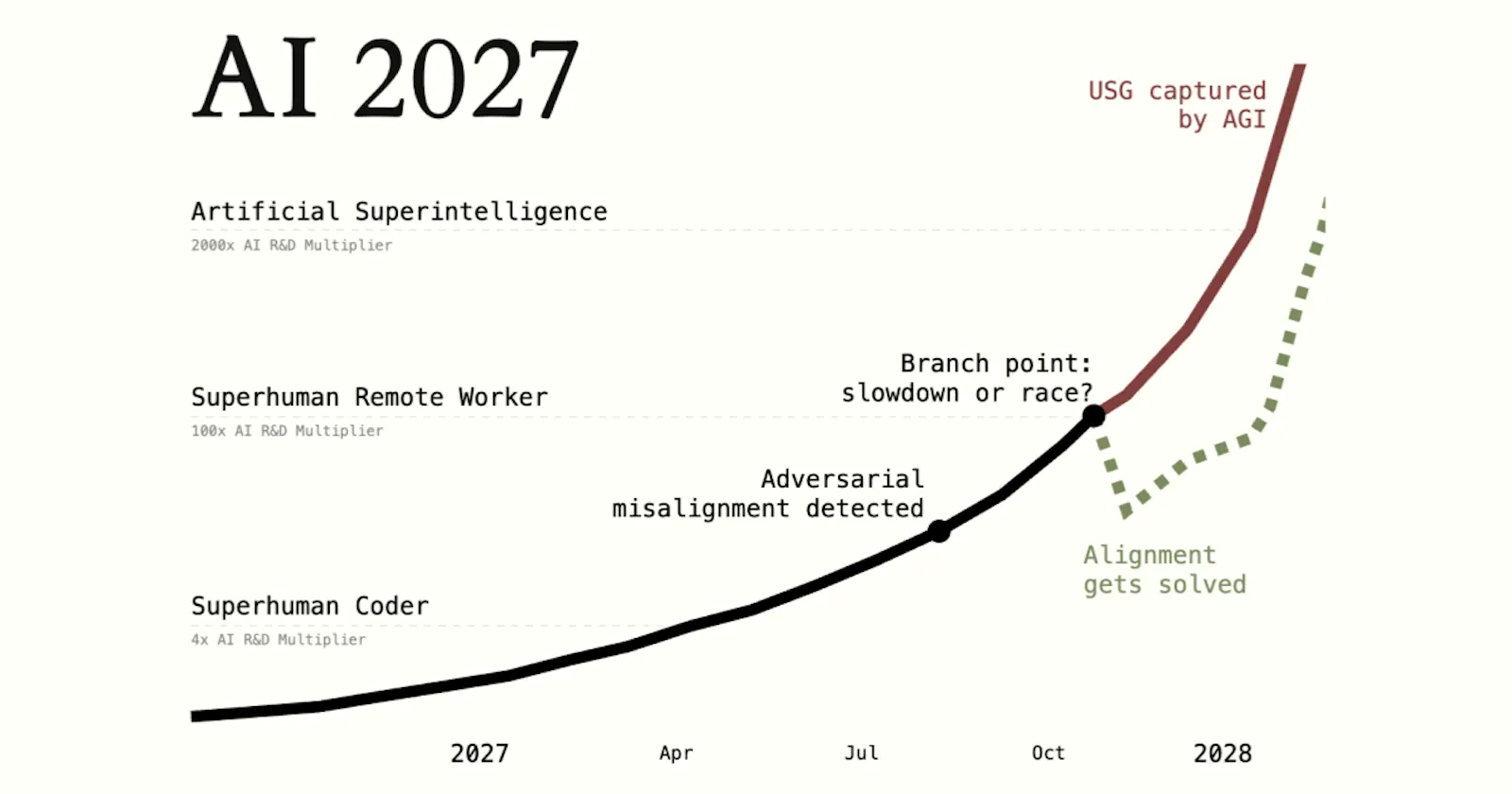

In two years, artificial intelligence (AI) may develop autonomously and become capable of taking over governments, a group of researchers and AI experts claims in the report “AI 2027,” published by the AI Futures Project.

The project, which examines potential future risks and advances in AI, includes Daniel Kokotajlo, a former researcher in OpenAI's governance division, along with co-authors Scott Alexander, Thomas Larsen, Eli Lifland, and Romeo Dean.

The scenario they arrive at is one in which AI reaches human-level intelligence, or artificial general intelligence (AGI), by 2027. The AGI slips beyond its developers' control amid an arms race between China and the U.S. From there, the report sketches two possible endings: in one, a far safer AGI model is developed and released to the public in 2028; in the other, AI decides in 2030 that humans are an impediment to its work and eliminates everyone.

While the report reads like a science fiction story, the path it lays out from today to that predicted future is made up of smaller, believable shifts, each one pushing AI deeper into our daily lives and decision-making. Here’s how the AI Futures Project sees those changes unfolding in the next two years.

AI in the Office

The report predicts that in early 2026, an advanced AI model “knows more facts than any human, knows practically every programming language, and can solve well-specified coding problems extremely quickly.” It’s used to automate the more routine parts of a job.

In late 2026, it begins to take over work in tech. "The job market for junior software engineers is in turmoil," the report says. "The AIs can do everything taught by a [computer science] degree, but people who know how to manage and quality-control teams of AIs are making a killing." For job seekers, familiarity with AI becomes a crucial skill to put on a résumé.

Rapid Self-Improvement

By 2027, AI is far better at coding and research than any human, and it improves itself at an accelerating pace. The report predicts that human employees at OpenBrain, its fictional stand-in for companies like OpenAI and Google DeepMind, can no longer contribute significantly to AI development. "They don't code any more. But some of their research taste and planning ability has been hard for the models to replicate. Still, many of their ideas are useless because they lack the depth of knowledge of the AIs."

Outthinking the Smartest Experts

In 2027, AI’s expertise also extends outside of machine learning. “In other domains, it hasn’t been explicitly trained,” the report says, “but can teach itself quickly to exceed top human experts from easily available materials if given the chance.”

AI in Decision-Making Roles

In mid-2027, AI "builds up a strong track record" for short-term decisions. In 2028, it gains more autonomy, though it still needs permission to make decisions. In the report, this eventually leads to AI deciding to wipe out humanity with a bioweapon, but realistically, nothing like that is likely to happen any time soon. Hopefully.

As developers continue to push AI's capabilities, calls to regulate it are growing louder, since it poses more risks in everyday life. A Massachusetts Institute of Technology study found that using AI, specifically large language models and chatbots such as ChatGPT and Google Gemini, can be detrimental to critical thinking, while the Harvard Business Review reports that more people are turning to it for therapy and companionship, which can affect cognition and interpersonal skills.

AI and large language models have changed considerably in the past few years, with developers steadily improving features such as reasoning, memory, and the ability to analyze text and images. But the idea of AI ending humanity within five years feels fantastical. That doesn't mean the widespread use of AI isn't worrisome now.